Projectile motion: Difference between revisions


The '''light field''' is a function that describes the amount of [[light]] flowing in every direction through every point in space. [[Michael Faraday]] was the first to propose (in an [[1846 in science|1846]] lecture entitled "Thoughts on Ray Vibrations") that light should be interpreted as a field, much like the magnetic fields on which he had been working for several years. The phrase ''light field'' was coined by Andrey Gershun in a classic paper on the radiometric properties of light in three-dimensional space (1936). The phrase has been redefined by researchers in [[computer graphics]] to mean something slightly different.{{citation needed|date=December 2009}}

==The 5D plenoptic function== | |||

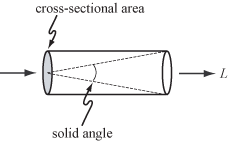

[[Image:Plenoptic-function-a.png|right|frame|Radiance ''L'' along a ray can be thought of as the amount of light traveling along all possible straight lines through a tube whose size is determined by its solid angle and cross-sectional area.]] | |||

If the concept is restricted to geometric [[optics]] (i.e., to incoherent light and to objects larger than the wavelength of light), then the fundamental carrier of light is a [[ray (optics)|ray]]. The measure for the amount of light flowing along a ray is [[radiance]], denoted by ''L'' and measured in [[watt]]s ''(W)'' per [[steradian]] ''(sr)'' per meter squared ''(m<sup>2</sup>)''. The steradian is a measure of [[solid angle]], and meters squared are used here as a measure of cross-sectional area, as shown at right.

[[Image:Plenoptic function b.svg|left|frame|Parameterizing a ray in [[Three-dimensional space|3D]] space by position ''(x,y,z)'' and direction ''(<math>\theta</math>,<math>\phi</math>)''.]] | |||

The radiance along all such rays in a region of three-dimensional space illuminated by an unchanging arrangement of lights is called the plenoptic function (Adelson 1991). The plenoptic illumination function is an idealized function used in [[computer vision]] and [[computer graphics]] to express the image of a scene from any possible viewing position at any viewing angle at any point in time. It is never actually computed in practice, but it is conceptually useful for understanding other concepts in vision and graphics.{{citation needed|date=December 2011}} Since rays in space can be parameterized by three coordinates, ''x'', ''y'', and ''z'', and two angles, <math>\theta</math> and <math>\phi</math>, as shown at left, it is a five-dimensional function, that is, a function over a five-dimensional [[manifold]] equivalent to the product of 3D [[Euclidean space]] and the [[2-sphere]].
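
The five-parameter description of a ray can be made concrete with a short sketch. The class name <code>Ray5D</code> and the angle convention (polar angle measured from the +''z'' axis, azimuth measured from the +''x'' axis) are assumptions chosen for illustration; the text above does not fix a convention.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Ray5D:
    """One point in the 5D domain of the plenoptic function (hypothetical helper)."""
    x: float
    y: float
    z: float
    theta: float  # polar angle from the +z axis, in radians (assumed convention)
    phi: float    # azimuth from the +x axis, in radians (assumed convention)

    def direction(self):
        """Return the unit direction vector, i.e. a point on the 2-sphere."""
        sin_theta = math.sin(self.theta)
        return (
            sin_theta * math.cos(self.phi),
            sin_theta * math.sin(self.phi),
            math.cos(self.theta),
        )


# A ray through the origin travelling along the +x axis.
ray = Ray5D(0.0, 0.0, 0.0, math.pi / 2, 0.0)
dx, dy, dz = ray.direction()
norm = math.sqrt(dx * dx + dy * dy + dz * dz)
```

The three position coordinates range over Euclidean space, while the two angles only ever select a unit vector, which is why the domain is the product of 3D space and the 2-sphere.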

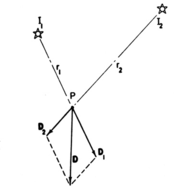

[[Image:Gershun-light-field-fig17.png|right|thumb|175px|Summing the irradiance vectors <math>D_1</math> and <math>D_2</math> arising from two light sources <math>I_1</math> and <math>I_2</math> produces a resultant vector <math>D</math> having the magnitude and direction shown (Gershun, fig 17).]] | |||

Like Adelson, Gershun defined the light field at each point in space as a 5D function. However, he treated it as an infinite collection of vectors, one per direction impinging on the point, with lengths proportional to their radiances. | |||

Integrating these vectors over any collection of lights, or over the entire sphere of directions, produces a single scalar value, the ''total irradiance'' at that point, together with a resultant direction. The figure at right, reproduced from Gershun's paper, shows this calculation for the case of two light sources. In computer graphics, this vector-valued function of [[Three-dimensional space|3D space]] is called the ''vector irradiance field'' (Arvo, 1994). The vector direction at each point in the field can be interpreted as the orientation in which a flat surface placed at that point would be most brightly illuminated.
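
The two-source summation can be sketched numerically. This is a minimal illustration, not Gershun's derivation: it assumes isotropic point sources, an inverse-square falloff, and a sign convention in which each vector points from the illuminated point toward its source. The function name <code>irradiance_vector</code> and the source positions are hypothetical.

```python
import math


def irradiance_vector(point, source_pos, intensity):
    """Vector contribution of one point source (assumed model).

    Magnitude is intensity / r^2; the vector points from `point`
    toward the source (assumed sign convention).
    """
    d = [s - p for s, p in zip(source_pos, point)]
    r2 = sum(c * c for c in d)
    r = math.sqrt(r2)
    scale = intensity / (r2 * r)  # (I / r^2) times the unit vector d / r
    return [c * scale for c in d]


P = (0.0, 0.0, 0.0)
D1 = irradiance_vector(P, (0.0, 0.0, 2.0), 4.0)  # source I_1 directly above P
D2 = irradiance_vector(P, (1.0, 0.0, 0.0), 1.0)  # source I_2 off to the side

# Resultant vector D = D1 + D2 and its magnitude.
D = [a + b for a, b in zip(D1, D2)]
magnitude = math.sqrt(sum(c * c for c in D))
```

Under these assumptions, the direction of <code>D</code> is the orientation a small flat surface at <code>P</code> should face to receive the most light from the two sources combined.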

===Higher dimensionality=== | |||

One can consider time, [[wavelength]], and [[Polarization (waves)|polarization]] angle as additional variables, yielding higher-dimensional functions. | |||

==The 4D light field== | |||

[[Image:Plenoptic-function-c.png|left|frame|Radiance along a ray remains constant if there are no blockers.]] | |||

In a plenoptic function, if the region of interest contains a [[concave polygon|concave]] object (think of a cupped hand), then light leaving one point on the object may travel only a short distance before being blocked by another point on the object. No practical device could measure the function in such a region. | |||

However, if we restrict ourselves to locations outside the [[convex hull]] (think shrink-wrap) of the object, then we can measure the plenoptic function by taking many photos using a digital camera. Moreover, in this case the function contains redundant information, because the radiance along a ray remains constant from point to point along its length, as shown at left (with a proviso<ref>The radiance on a ray that hits the object is not equal to the radiance of the light coming from the object on the same ray but on the other side of the object.</ref>). In fact, the redundant information is exactly one dimension, leaving us with a four-dimensional function (that is, a function of points in a particular four-dimensional [[manifold]]), so long as we don't try to include both light rays coming in and hitting the object and light rays emanating from the object on the opposite side. Parry Moon dubbed this function the ''photic field'' (1981), while researchers in computer graphics call it the ''4D light field'' (Levoy 1996) or ''Lumigraph'' (Gortler 1996). Formally, the 4D light field is defined as radiance along rays in empty space. | |||

The set of rays in a light field can be parameterized in a variety of ways, a few of which are shown below. Of these, the most common is the two-plane parameterization shown at right (below). While this parameterization cannot represent all rays, for example rays parallel to the two planes if the planes are parallel to each other, it has the advantage of relating closely to the analytic geometry of perspective imaging. Indeed, a simple way to think about a two-plane light field is as a collection of perspective images of the ''st'' plane (and any objects that may lie astride or beyond it), each taken from an observer position on the ''uv'' plane. A light field parameterized this way is sometimes called a ''light slab''. | |||

Note that a light slab does ''not'' mean that the 4D light field is equivalent to capturing two 2D planes of information (the latter is only two-dimensional). For example, the pair of points at position (0,0) in the ''st'' plane and (1,1) in the ''uv'' plane corresponds to a single ray in space. Many other rays pass through (0,0) in the ''st'' plane or through (1,1) in the ''uv'' plane, but the pair of points identifies only the one ray that passes through both.
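
The following sketch shows how four slab coordinates pick out exactly one ray. The placement of the ''uv'' plane at ''z'' = 0 and the ''st'' plane at ''z'' = 1 is an assumption made for illustration, as is the function name <code>slab_ray</code>.

```python
import math


def slab_ray(u, v, s, t, z_uv=0.0, z_st=1.0):
    """Return (origin, unit direction) of the ray through (u, v) and (s, t).

    The two planes are assumed parallel, at z = z_uv and z = z_st.
    """
    origin = (u, v, z_uv)
    d = (s - u, t - v, z_st - z_uv)
    length = math.sqrt(sum(c * c for c in d))
    return origin, tuple(c / length for c in d)


# The ray through (1,1) on the uv plane and (0,0) on the st plane.
origin, direction = slab_ray(1.0, 1.0, 0.0, 0.0)

# A different ray that also passes through (0,0) on the st plane.
other_origin, other_direction = slab_ray(0.0, 0.0, 0.0, 0.0)
```

Both rays share the same ''st'' point, but they differ in origin and direction, so all four coordinates are needed to distinguish them.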

[[Image:Light-field-parameterizations.png|left|frame| Some alternative parameterizations of the 4D light field, which represents the flow of light through an empty region of three-dimensional space. Left: points on a plane or curved surface and directions leaving each point. Center: pairs of points on the surface of a sphere. Right: pairs of points on two planes in general (meaning any) position.]] | |||

{{clear}} | |||

===Sound analog=== | |||

The analog of the 4D light field for sound is the ''sound field'' or ''wave field,'' as in [[wave field synthesis]], and the corresponding parametrization is the Kirchhoff-Helmholtz integral, which states that, in the absence of obstacles, a sound field over time is given by the pressure on a plane. Thus this is two dimensions of information at any point in time, and over time a 3D field. | |||

This two-dimensionality, compared with the apparent four-dimensionality of light, is because light travels in rays (0D at a point in time, 1D over time), while by [[Huygens' Principle]], a sound [[wave front]] can be modeled as spherical waves (2D at a point in time, 3D over time): light moves in a single direction (2D of information), while sound simply expands in every direction. However, this distinction is not real, because light is also made of waves and a similar reduction in dimension could in principle be applied. | |||

{{clear}} | |||

==Ways to create light fields== | |||

Light fields are a fundamental representation for light. As such, there are as many ways of creating light fields as there are computer programs capable of creating images or instruments capable of capturing them. | |||

In computer graphics, light fields are typically produced either by [[rendering (computer graphics)|rendering]] a [[3D model]] or by photographing a real scene. In either case, to produce a light field, views must be obtained for a large collection of viewpoints. Depending on the parameterization employed, this collection will typically span some portion of a line, circle, plane, sphere, or other shape, although unstructured collections of viewpoints are also possible (Buehler 2001).

Devices for capturing [[light-field photography|light fields photographically]] may include a moving handheld camera or a robotically controlled camera (Levoy 2002), an arc of cameras (as in the [[bullet time]] effect used in ''[[The Matrix]]''), a dense array of cameras (Kanade 1998; Yang 2002; Wilburn 2005), handheld cameras ([[Ren Ng|Ng]] 2005; Georgiev 2006; Marwah 2013), microscopes (Levoy 2006), or other optical systems (Bolles 1987).

How many images should be in a light field? The largest known light field (of [http://graphics.stanford.edu/projects/mich/lightfield-of-night/ Michelangelo's statue of Night]) contains 24,000 1.3-megapixel images. At a deeper level, the answer depends on the application. For light field rendering (see the Applications section below), if you want to walk completely around an opaque object, then of course you need to photograph its back side. Less obviously, if you want to walk close to the object, and the object lies astride the ''st'' plane, then you need images taken at finely spaced positions on the ''uv'' plane (in the two-plane parameterization shown above), which is now behind you, and these images need to have high spatial resolution.

The number and arrangement of images in a light field, and the resolution of each image, are together called the "sampling" of the 4D light field. Analyses of ''light field sampling'' have been undertaken by many researchers; a good starting point is Chai (2000). Also of interest are Durand (2005) for the effects of occlusion, Ramamoorthi (2006) for the effects of lighting and reflection, and [[Ren Ng|Ng]] (2005) and Zwicker (2006) for applications to [[plenoptic camera]]s and 3D displays, respectively.

==Applications of light fields== | |||

''Computational imaging'' refers to any image formation method that involves a digital computer. Many of these methods operate at visible wavelengths, and many of those produce light fields. As a result, listing all applications of light fields would require surveying all uses of computational imaging in art, science, engineering, and medicine. In computer graphics, some selected applications are: | |||

[[Image:Gershun-light-field-fig24.png|right|thumb|200px| A downward-facing light source (F-F') induces a light field whose irradiance vectors curve outwards. Using calculus, Gershun could compute the irradiance falling on points (<math>P_1, P_2</math>) on a surface. (Gershun, fig 24)]] | |||

* '''Illumination engineering.''' Gershun's reason for studying the light field was to derive (in closed form if possible) the illumination patterns that would be observed on surfaces due to light sources of various shapes positioned above these surfaces. An example is shown at right. A more modern study is (Ashdown 1993).

* '''Light field rendering.''' By extracting appropriate 2D slices from the 4D light field of a scene, one can produce novel views of the scene (Levoy 1996; Gortler 1996). Depending on the parameterization of the light field and slices, these views might be [[Perspective projection|perspective]], [[Orthographic projection (geometry)|orthographic]], crossed-slit (Zomet 2003), general linear cameras (Yu and McMillan 2004), multi-perspective (Rademacher 1998), or another type of projection. Light field rendering is one form of [[Image-Based Modeling And Rendering|image-based rendering]]. | |||

* '''[[Synthetic aperture]] photography.''' By integrating an appropriate 4D subset of the samples in a light field, one can approximate the view that would be captured by a camera having a finite (i.e., non-pinhole) aperture. Such a view has a finite [[depth of field]]. By shearing or warping the light field before performing this integration, one can focus on different fronto-parallel (Isaksen 2000) or oblique (Vaish 2005) planes in the scene. If the light field is captured using a handheld camera ([[Ren Ng|Ng]] 2005), this essentially constitutes a digital camera whose photographs can be refocused after they are taken. | |||
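
The integration and shearing steps described for synthetic aperture photography can be sketched as a shift-and-add over views. This is a minimal illustration under stated assumptions, not the method of any cited paper: the light field is stored as a nested list <code>lf[u][v][s][t]</code>, the shear is an integer pixel shift per view step, and the function name <code>refocus</code> is hypothetical.

```python
def refocus(lf, shift):
    """Average all views after shearing the light field.

    View (u, v) is sampled at an offset of shift * (u - uc, v - vc) pixels,
    where (uc, vc) is the central view. Samples that fall outside the
    image are skipped. `shift` selects the fronto-parallel focal plane.
    """
    nu, nv = len(lf), len(lf[0])
    ns, nt = len(lf[0][0]), len(lf[0][0][0])
    uc, vc = nu // 2, nv // 2
    out = [[0.0] * nt for _ in range(ns)]
    for s in range(ns):
        for t in range(nt):
            total, count = 0.0, 0
            for u in range(nu):
                for v in range(nv):
                    ss = s + shift * (u - uc)
                    tt = t + shift * (v - vc)
                    if 0 <= ss < ns and 0 <= tt < nt:
                        total += lf[u][v][ss][tt]
                        count += 1
            # The central view always contributes, so count >= 1.
            out[s][t] = total / count
    return out


# Synthetic 3x3-view light field of one bright point whose image moves
# by one pixel per view step (i.e. the point has a disparity of 1).
lf = [[[[1.0 if (s == 2 + (u - 1) and t == 2 + (v - 1)) else 0.0
         for t in range(5)] for s in range(5)]
       for v in range(3)] for u in range(3)]

sharp = refocus(lf, 1)    # shear matches the point's disparity
blurred = refocus(lf, 0)  # no shear: the point is spread over nine pixels
```

With the matching shear, all nine views agree at the centre pixel and the point is in focus; without it, only the central view contributes there, which mimics the blur of a finite aperture focused elsewhere.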

<br clear="all" /> | |||

* '''3D display.''' By presenting a light field using technology that maps each sample to the appropriate ray in physical space, one obtains an [[autostereoscopy|autostereoscopic]] visual effect akin to viewing the original scene. Non-digital technologies for doing this include [[integral photography]], [[Volumetric display|parallax panoramagrams]], and [[holography]]; digital technologies include placing an array of lenslets over a high-resolution display screen, or projecting the imagery onto an array of lenslets using an array of video projectors. If the latter is combined with an array of video cameras, one can capture and display a time-varying light field. This essentially constitutes a [[3D television]] system (Javidi 2002; Matusik 2004). | |||

Image generation and predistortion of synthetic imagery for holographic stereograms is one of the earliest examples of computed light fields, anticipating and later motivating the geometry used in Levoy and Hanrahan's work (Halle 1991, 1994). | |||

Modern approaches to light field display explore co-designs of optical elements and compressive computation to achieve higher resolutions, increased contrast, wider fields of view, and other benefits (Wetzstein 2012, 2011; Lanman 2011, 2010). | |||

* '''Glare reduction.''' [[Glare (vision)|Glare]] arises due to multiple scattering of light inside the camera's body and lens optics and reduces image contrast. While glare has been analyzed in 2D image space (Talvala 2007), it is useful to identify it as a 4D ray-space phenomenon (Raskar 2008). By statistically analyzing the ray-space inside a camera, one can classify and remove glare artifacts. In ray-space, glare behaves as high frequency noise and can be reduced by outlier rejection. Such analysis can be performed by capturing the light field inside the camera, but it results in the loss of spatial resolution. Uniform and non-uniform ray sampling could be used to reduce glare without significantly compromising image resolution (Raskar 2008). | |||
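
The idea of treating glare as outliers in ray space can be illustrated with a per-pixel median over angular samples. The median is used here only as a simple stand-in for outlier rejection and is not claimed to be the procedure of Raskar (2008); the data layout and the function name <code>reject_glare</code> are assumptions.

```python
from statistics import median


def reject_glare(angular_samples):
    """Replace each pixel's angular samples by their median.

    angular_samples[s][t] is a list of radiance values for the rays that
    reach pixel (s, t) from different directions (assumed layout).
    """
    return [[median(pixel) for pixel in row] for row in angular_samples]


# One pixel seen along five rays; one ray is contaminated by glare.
samples = [[[0.20, 0.21, 0.19, 0.95, 0.20]]]
clean = reject_glare(samples)

# A plain average, as an ordinary sensor pixel would record, for comparison.
naive = sum(samples[0][0]) / len(samples[0][0])
```

The plain average is pulled upward by the single bright ray, while the median stays at the value shared by the uncontaminated rays.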

==Notes== | |||

{{reflist}} | |||

==See also== | |||

* [[Angle-sensitive pixel]] | |||

* [[Lytro]] | |||

* [[Reflectance paper]] | |||

* [[Raytrix]] | |||

==References== | |||

===Theory=== | |||

* Adelson, E.H., Bergen, J.R. (1991). [http://web.mit.edu/persci/people/adelson/pub_pdfs/elements91.pdf#search=%22adelson%20plenoptic%20function%20elements%22 "The Plenoptic Function and the Elements of Early Vision"], In ''Computational Models of Visual Processing'', M. Landy and J.A. Movshon, eds., MIT Press, Cambridge, 1991, pp. 3–20.

* Arvo, J. (1994). [http://portal.acm.org/citation.cfm?id=192250&coll=portal&dl=ACM&CFID=1089013&CFTOKEN=60772597 "The Irradiance Jacobian for Partially Occluded Polyhedral Sources"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 335–342. | |||

* Bolles, R.C., Baker, H. H., Marimont, D.H. (1987). [http://link.springer.com/article/10.1007/BF00128525# "Epipolar-Plane Image Analysis: An Approach to Determining Structure from Motion"], ''International Journal of Computer Vision'', Vol. 1, No. 1, 1987, Kluwer Academic Publishers, pp 7-55. | |||

* Faraday, M., [http://www.padrak.com/ine/FARADAY1.html "Thoughts on Ray Vibrations"], ''Philosophical Magazine'', S.3, Vol XXVIII, N188, May 1846. | |||

* Gershun, A. (1936). "The Light Field", Moscow, 1936. Translated by P. Moon and G. Timoshenko in ''Journal of Mathematics and Physics'', Vol. XVIII, MIT, 1939, pp. 51–151. | |||

* Gortler, S.J., Grzeszczuk, R., Szeliski, R., Cohen, M. (1996). [http://portal.acm.org/citation.cfm?id=237200 "The Lumigraph"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 43–54. | |||

* Levoy, M., Hanrahan, P. (1996). [http://graphics.stanford.edu/papers/light/ "Light Field Rendering"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 31–42. | |||

* Moon, P., Spencer, D.E. (1981). ''The Photic Field'', MIT Press. | |||

* [http://scripts.mit.edu/~raskar/lightfields/index.php?title=An_Introduction_to_The_Wigner_Distribution_in_Geometric_Optics Wigner Distribution Function and Light Fields] | |||

===Analysis=== | |||

* G. Wetzstein, I. Ihrke, W. Heidrich (2013) [http://www.cs.ubc.ca/labs/imager/tr/2010/TheoryOfPlenopticMultiplexing/PlenopticMultiplexing-IJCV2012.pdf "On Plenoptic Multiplexing and Reconstruction"], ''International Journal of Computer Vision (IJCV)'', Volume 101, Issue 2, pp. 384–400. | |||

* Ramamoorthi, R., Mahajan, D., Belhumeur, P. (2006). [http://www1.cs.columbia.edu/~ravir/papers/firstorder/index.html "A First Order Analysis of Lighting, Shading, and Shadows"], ''ACM TOG''. | |||

* Zwicker, M., Matusik, W., Durand, F., Pfister, H. (2006). [http://people.csail.mit.edu/wojciech/DispAntiAlias/ "Antialiasing for Automultiscopic 3D Displays"], ''Eurographics Symposium on Rendering, 2006''. | |||

* Ng, R. (2005). [http://graphics.stanford.edu/papers/fourierphoto/ "Fourier Slice Photography"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 735–744. | |||

* Durand, F., Holzschuch, N., Soler, C., Chan, E., Sillion, F. X. (2005). [http://people.csail.mit.edu/fredo/PUBLI/Fourier/ "A Frequency Analysis of Light Transport"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 1115–1126. | |||

* Chai, J.-X., Tong, X., Chan, S.-C., Shum, H. (2000). [http://graphics.cs.cmu.edu/projects/plenoptic-sampling/ps_projectpage.htm "Plenoptic Sampling"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 307–318. | |||

* Halle, M. (1994) [http://www.spl.harvard.edu/~halazar/pubs/discrete_spie94_preprint.pdf "Holographic Stereograms as Discrete imaging systems"], in ''SPIE Proc. Vol. #2176: Practical Holography VIII'', S.A. Benton, ed., pp. 73–84. | |||

* Yu, J., McMillan, L. (2004). [http://www.csbio.unc.edu/mcmillan/pubs/eccv2004_Yu.pdf "General Linear Cameras"], ''Proc. ECCV 2004'', Lecture Notes in Computer Science, pp. 14–27. | |||

===Light field cameras=== | |||

* Marwah, K., Wetzstein, G., Bando, Y., Raskar, R. (2013). [http://web.media.mit.edu/~gordonw/CompressiveLightFieldPhotography/ "Compressive Light Field Photography using Overcomplete Dictionaries and Optimized Projections"], ''ACM Transactions on Graphics (SIGGRAPH)''. | |||

* Liang, C.K., Lin, T.H., Wong, B.Y., Liu, C., Chen, H. H. (2008). [http://mpac.ee.ntu.edu.tw/~chiakai/pap/ "Programmable Aperture Photography: Multiplexed Light Field Acquisition"], ''Proc. ACM SIGGRAPH''.

* Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., Tumblin, J. (2007). [http://web.media.mit.edu/~raskar/Mask/ "Dappled Photography: Mask Enhanced Cameras for Heterodyned Light Fields and Coded Aperture Refocusing"], ''Proc. ACM SIGGRAPH''. | |||

* Georgiev, T., Zheng, C., Nayar, S., Curless, B., Salesin, D., Intwala, C. (2006). [http://www.tgeorgiev.net/Spatioangular.pdf "Spatio-angular Resolution Trade-offs in Integral Photography"], ''Proc. EGSR 2006''. | |||

* Kanade, T., Saito, H., Vedula, S. (1998). [http://www.cs.cmu.edu/~virtualized-reality/3DRoom/TR.htm "The 3D Room: Digitizing Time-Varying 3D Events by Synchronized Multiple Video Streams"], Tech report CMU-RI-TR-98-34, December 1998. | |||

* Levoy, M. (2002). [http://graphics.stanford.edu/projects/gantry/ Stanford Spherical Gantry]. | |||

* Levoy, M., Ng, R., Adams, A., Footer, M., Horowitz, M. (2006). [http://graphics.stanford.edu/papers/lfmicroscope/ "Light Field Microscopy"], ''ACM Transactions on Graphics'' (Proc. SIGGRAPH), Vol. 25, No. 3. | |||

* Ng, R., Levoy, M., Brédif, M., Duval, G., Horowitz, M., Hanrahan, P. (2005). [http://graphics.stanford.edu/papers/lfcamera/ "Light Field Photography with a Hand-Held Plenoptic Camera"], ''Stanford Tech Report'' CTSR 2005-02, April, 2005. | |||

* Wilburn, B., Joshi, N., Vaish, V., Talvala, E., Antunez, E., Barth, A., Adams, A., Levoy, M., Horowitz, M. (2005). [http://graphics.stanford.edu/papers/CameraArray/ "High Performance Imaging Using Large Camera Arrays"], ''ACM Transactions on Graphics'' (Proc. SIGGRAPH), Vol. 24, No. 3, pp. 765–776. | |||

* Yang, J.C., Everett, M., Buehler, C., McMillan, L. (2002). [http://portal.acm.org/citation.cfm?id=581907&dl=ACM&coll=&CFID=15151515&CFTOKEN=6184618 "A Real-Time Distributed Light Field Camera"], ''Proc. Eurographics Rendering Workshop 2002''. | |||

* [http://www.cafadis.ull.es "The CAFADIS camera"] | |||

===Light field displays=== | |||

* Wetzstein, G., Lanman, D., Hirsch, M., Raskar, R. (2012). [http://web.media.mit.edu/%7Egordonw/TensorDisplays/TensorDisplays.pdf "Tensor Displays: Compressive Light Field Display using Multilayer Displays with Directional Backlighting"], '' ACM Transactions on Graphics (SIGGRAPH)'' | |||

* Wetzstein, G., Lanman, D., Heidrich, W., Raskar, R. (2011). [http://www.cs.ubc.ca/labs/imager/tr/2011/Wetzstein_SIG2011_Layered3D/TomographicLightFieldSynthesis.pdf "Layered 3D: Tomographic Image Synthesis for Attenuation-based Light Field and High Dynamic Range Displays"], '' ACM Transactions on Graphics (SIGGRAPH)'' | |||

* Lanman, D., Wetzstein, G., Hirsch, M., Heidrich, W., Raskar, R. (2011). [http://alumni.media.mit.edu/~dlanman/research/polarization-fields/polarization-fields.pdf "Polarization Fields: Dynamic Light Field Display using Multi-Layer LCDs"], ''ACM Transactions on Graphics (SIGGRAPH Asia)'' | |||

* Lanman, D., Hirsch, M., Kim, Y., Raskar, R. (2010). [http://web.media.mit.edu/~mhirsch/hr3d/content-adaptive.pdf "HR3D: Glasses-free 3D Display using Dual-stacked LCDs High-Rank 3D Display using Content-Adaptive Parallax Barriers"], '' ACM Transactions on Graphics (SIGGRAPH Asia)''

* Matusik, W., Pfister, H. (2004). [http://portal.acm.org/citation.cfm?id=1015805&dl=ACM&coll=&CFID=15151515&CFTOKEN=6184618 "3D TV: A Scalable System for Real-Time Acquisition, Transmission, and Autostereoscopic Display of Dynamic Scenes"], ''Proc. ACM SIGGRAPH'', ACM Press. | |||

* Javidi, B., Okano, F., eds. (2002). ''Three-Dimensional Television, Video and Display Technologies'', Springer-Verlag. | |||

===Light field archives=== | |||

* [http://lightfield.stanford.edu/ "The Stanford Light Field Archive"] | |||

* [http://vision.ucsd.edu/datasets/lfarchive/ "UCSD/MERL Light Field Repository"] | |||

* [http://hci.iwr.uni-heidelberg.de/HCI/Research/LightField/lf_benchmark.php "The HCI Light Field Benchmark"] | |||

* [http://web.media.mit.edu/~gordonw/SyntheticLightFields/index.php "Synthetic Light Field Archive"] | |||

===Applications=== | |||

* Heide, F., Wetzstein, G., Raskar, R., Heidrich, W. (2013) [http://www.adaptiveimagesynthesis.com/ "Adaptive Image Synthesis for Compressive Displays"], ACM Transactions on Graphics (SIGGRAPH) | |||

* Wetzstein, G., Raskar, R., Heidrich, W. (2011) [http://www.cs.ubc.ca/labs/imager/tr/2011/LFBOS/index.html "Hand-Held Schlieren Photography with Light Field Probes"], IEEE International Conference on Computational Photography (ICCP) | |||

* Pérez, F., Marichal, J. G., Rodriguez, J.M. (2008). [http://www.eurasip.org/Proceedings/Eusipco/Eusipco2008/papers/1569101893.pdf "The Discrete Focal Stack Transform"], ''Proc. EUSIPCO'' | |||

* Raskar, R., Agrawal, A., Wilson, C., Veeraraghavan, A. (2008). [http://www.merl.com/people/agrawal/sig08/index.html "Glare Aware Photography: 4D Ray Sampling for Reducing Glare Effects of Camera Lenses"], ''Proc. ACM SIGGRAPH.'' | |||

* Talvala, E-V., Adams, A., Horowitz, M., Levoy, M. (2007). [http://graphics.stanford.edu/papers/glare_removal/ "Veiling Glare in High Dynamic Range Imaging"], ''Proc. ACM SIGGRAPH.'' | |||

* Halle, M., Benton, S., Klug, M., Underkoffler, J. (1991). [http://www.spl.harvard.edu/~halazar/pubs/ultragram_spie91_preprint.pdf "The UltraGram: A Generalized Holographic Stereogram"], ''SPIE Vol. 1461, Practical Holography V'', S.A. Benton, ed., pp. 142–155. | |||

* Zomet, A., Feldman, D., Peleg, S., Weinshall, D. (2003). [http://www2.computer.org/portal/web/csdl/doi/10.1109/TPAMI.2003.1201823 "Mosaicing New Views: The Crossed-Slits Projection"], ''IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI)'', Vol. 25, No. 6, June 2003, pp. 741–754. | |||

* Vaish, V., Garg, G., Talvala, E., Antunez, E., Wilburn, B., Horowitz, M., Levoy, M. (2005). [http://portal.acm.org/citation.cfm?id=1099539.1100041&coll=&dl=GUIDE&CFID=15151515&CFTOKEN=6184618 "Synthetic Aperture Focusing using a Shear-Warp Factorization of the Viewing Transform"], ''Proc. Workshop on Advanced 3D Imaging for Safety and Security'', in conjunction with CVPR 2005. | |||

* Rademacher, P., Bishop, G. (1998). [http://portal.acm.org/citation.cfm?id=280871&coll=portal&dl=ACM "Multiple-Center-of-Projection Images"], ''Proc. ACM SIGGRAPH'', ACM Press. | |||

* Isaksen, A., McMillan, L., Gortler, S.J. (2000). [http://portal.acm.org/citation.cfm?coll=GUIDE&dl=GUIDE&id=344929 "Dynamically Reparameterized Light Fields"], ''Proc. ACM SIGGRAPH'', ACM Press, pp. 297–306. | |||

* Buehler, C., Bosse, M., McMillan, L., Gortler, S., Cohen, M. (2001). [http://portal.acm.org/citation.cfm?id=383309&dl=ACM&coll=&CFID=15151515&CFTOKEN=6184618 "Unstructured Lumigraph Rendering"], ''Proc. ACM SIGGRAPH'', ACM Press. | |||

* Ashdown, I. (1993). [http://citeseer.ist.psu.edu/ashdown92nearfield.html "Near-Field Photometry: A New Approach"], ''Journal of the Illuminating Engineering Society'', Vol. 22, No. 1, Winter, 1993, pp. 163–180. | |||

[[Category:Optics]] | |||

Revision as of 13:00, 30 December 2013

The light field is a function that describes the amount of light flowing in every direction through every point in space. Michael Faraday was the first to propose (in an 1846 lecture entitled "Thoughts on Ray Vibrations") that light should be interpreted as a field, much like the magnetic fields on which he had been working for several years. The phrase light field was coined by Andrey Gershun in a classic paper on the radiometric properties of light in three-dimensional space (1936). The phrase has been redefined by researchers in computer graphics to mean something slightly different.

The 5D plenoptic function

If the concept is restricted to geometric optics (i.e., to incoherent light and to objects larger than the wavelength of light), then the fundamental carrier of light is a ray. The measure for the amount of light flowing along a ray is radiance, denoted by L and measured in watts (W) per steradian (sr) per meter squared (m²). The steradian is a measure of solid angle, and meters squared are used here as a measure of cross-sectional area, as shown at right.

The radiance along all such rays in a region of three-dimensional space illuminated by an unchanging arrangement of lights is called the plenoptic function (Adelson 1991). The plenoptic illumination function is an idealized function used in computer vision and computer graphics to express the image of a scene from any possible viewing position at any viewing angle at any point in time. It is never actually computed in practice, but it is conceptually useful for understanding other concepts in vision and graphics. Since rays in space can be parameterized by three coordinates, x, y, and z, and two angles, θ and φ, as shown at left, it is a five-dimensional function, that is, a function over a five-dimensional manifold equivalent to the product of 3D Euclidean space and the 2-sphere.

Like Adelson, Gershun defined the light field at each point in space as a 5D function. However, he treated it as an infinite collection of vectors, one per direction impinging on the point, with lengths proportional to their radiances.

Integrating these vectors over any collection of lights, or over the entire sphere of directions, produces a single scalar value, the total irradiance at that point, together with a resultant direction. The figure at right, reproduced from Gershun's paper, shows this calculation for the case of two light sources. In computer graphics, this vector-valued function of 3D space is called the vector irradiance field (Arvo, 1994). The vector direction at each point in the field can be interpreted as the orientation in which a flat surface placed at that point would be most brightly illuminated.

Higher dimensionality

One can consider time, wavelength, and polarization angle as additional variables, yielding higher-dimensional functions.

The 4D light field

In a plenoptic function, if the region of interest contains a concave object (think of a cupped hand), then light leaving one point on the object may travel only a short distance before being blocked by another point on the object. No practical device could measure the function in such a region.

However, if we restrict ourselves to locations outside the convex hull (think shrink-wrap) of the object, then we can measure the plenoptic function by taking many photos using a digital camera. Moreover, in this case the function contains redundant information, because the radiance along a ray remains constant from point to point along its length, as shown at left (with a proviso[1]). In fact, the redundant information is exactly one dimension, leaving us with a four-dimensional function (that is, a function of points in a particular four-dimensional manifold), so long as we don't try to include both light rays coming in and hitting the object and light rays emanating from the object on the opposite side. Parry Moon dubbed this function the photic field (1981), while researchers in computer graphics call it the 4D light field (Levoy 1996) or Lumigraph (Gortler 1996). Formally, the 4D light field is defined as radiance along rays in empty space.

The set of rays in a light field can be parameterized in a variety of ways, a few of which are shown below. Of these, the most common is the two-plane parameterization shown at right (below). While this parameterization cannot represent all rays, for example rays parallel to the two planes if the planes are parallel to each other, it has the advantage of relating closely to the analytic geometry of perspective imaging. Indeed, a simple way to think about a two-plane light field is as a collection of perspective images of the st plane (and any objects that may lie astride or beyond it), each taken from an observer position on the uv plane. A light field parameterized this way is sometimes called a light slab.

Note that a light slab does not mean that the 4D light field is equivalent to capturing two 2D planes of information (the latter is only two-dimensional). For example, the pair of points at position (0,0) in the st plane and (1,1) in the uv plane corresponds to a single ray in space. Many other rays pass through (0,0) in the st plane or through (1,1) in the uv plane, but the pair of points identifies only the one ray that passes through both.

Sound analog

The analog of the 4D light field for sound is the sound field or wave field, as in wave field synthesis, and the corresponding parametrization is the Kirchhoff-Helmholtz integral, which states that, in the absence of obstacles, a sound field over time is given by the pressure on a plane. Thus this is two dimensions of information at any point in time, and over time a 3D field.

This two-dimensionality, compared with the apparent four-dimensionality of light, is because light travels in rays (0D at a point in time, 1D over time), while by Huygens' Principle, a sound wave front can be modeled as spherical waves (2D at a point in time, 3D over time): light moves in a single direction (2D of information), while sound simply expands in every direction. However, this distinction is not real, because light is also made of waves and a similar reduction in dimension could in principle be applied.

Ways to create light fields

Light fields are a fundamental representation for light. As such, there are as many ways of creating light fields as there are computer programs capable of creating images or instruments capable of capturing them.

In computer graphics, light fields are typically produced either by rendering a 3D model or by photographing a real scene. In either case, to produce a light field views must be obtained for a large collection of viewpoints. Depending on the parameterization employed, this collection will typically span some portion of a line, circle, plane, sphere, or other shape, although unstructured collections of viewpoints are also possible (Buehler 2001).

Devices for capturing light fields photographically may include a moving handheld camera or a robotically controlled camera (Levoy 2002), an arc of cameras (as in the bullet time effect used in The Matrix), a dense array of cameras (Kanade 1998; Yang 2002; Wilburn 2005), handheld cameras (Ng 2005; Georgiev 2006; Marwah 2013), microscopes (Levoy 2006), or other optical systems (Bolles 1987).

How many images should be in a light field? The largest known light field (of Michelangelo's statue of Night) contains 24,000 1.3-megapixel images. At a deeper level, the answer depends on the application. For light field rendering (see the Application section below), if you want to walk completely around an opaque object, then of course you need to photograph its back side. Less obviously, if you want to walk close to the object, and the object lies astride the st plane, then you need images taken at finely spaced positions on the uv plane (in the two-plane parameterization shown above), which is now behind you, and these images need to have high spatial resolution.
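To give a sense of scale, the figures quoted above can be turned into a sample count. The sketch below is simple arithmetic; the helper names are hypothetical, and the 3 bytes per sample (uncompressed 8-bit RGB) is an assumption, not a property of the actual dataset.

```python
def light_field_sample_count(num_images, megapixels_per_image):
    """Total number of pixel samples across all images in the light field."""
    return int(round(num_images * megapixels_per_image * 1_000_000))

def raw_size_gigabytes(num_samples, bytes_per_sample=3):
    """Uncompressed size in GB. Assumption: 3 bytes per sample (8-bit RGB)."""
    return num_samples * bytes_per_sample / 1e9

# 24,000 images of 1.3 megapixels each, as quoted in the text.
samples = light_field_sample_count(24_000, 1.3)
size_gb = raw_size_gigabytes(samples)
```

Under these assumptions the light field holds about 31.2 billion samples, or roughly 94 GB before compression.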

The number and arrangement of images in a light field, and the resolution of each image, are together called the "sampling" of the 4D light field. Analyses of light field sampling have been undertaken by many researchers; a good starting point is Chai (2000). Also of interest are Durand (2005) for the effects of occlusion, Ramamoorthi (2006) for the effects of lighting and reflection, and Ng (2005) and Zwicker (2006) for applications to plenoptic cameras and 3D displays, respectively.

Applications of light fields

Computational imaging refers to any image formation method that involves a digital computer. Many of these methods operate at visible wavelengths, and many of those produce light fields. As a result, listing all applications of light fields would require surveying all uses of computational imaging in art, science, engineering, and medicine. In computer graphics, some selected applications are:

- Illumination engineering. Gershun's reason for studying the light field was to derive (in closed form if possible) the illumination patterns that would be observed on surfaces due to light sources of various shapes positioned above these surfaces. An example is shown at right. A more modern study is (Ashdown 1993).

- Light field rendering. By extracting appropriate 2D slices from the 4D light field of a scene, one can produce novel views of the scene (Levoy 1996; Gortler 1996). Depending on the parameterization of the light field and slices, these views might be perspective, orthographic, crossed-slit (Zomet 2003), general linear cameras (Yu and McMillan 2004), multi-perspective (Rademacher 1998), or another type of projection. Light field rendering is one form of image-based rendering.

- Synthetic aperture photography. By integrating an appropriate 4D subset of the samples in a light field, one can approximate the view that would be captured by a camera having a finite (i.e., non-pinhole) aperture. Such a view has a finite depth of field. By shearing or warping the light field before performing this integration, one can focus on different fronto-parallel (Isaksen 2000) or oblique (Vaish 2005) planes in the scene. If the light field is captured using a handheld camera (Ng 2005), this essentially constitutes a digital camera whose photographs can be refocused after they are taken.

- 3D display. By presenting a light field using technology that maps each sample to the appropriate ray in physical space, one obtains an autostereoscopic visual effect akin to viewing the original scene. Non-digital technologies for doing this include integral photography, parallax panoramagrams, and holography; digital technologies include placing an array of lenslets over a high-resolution display screen, or projecting the imagery onto an array of lenslets using an array of video projectors. If the latter is combined with an array of video cameras, one can capture and display a time-varying light field. This essentially constitutes a 3D television system (Javidi 2002; Matusik 2004).

Image generation and predistortion of synthetic imagery for holographic stereograms is one of the earliest examples of computed light fields, anticipating and later motivating the geometry used in Levoy and Hanrahan's work (Halle 1991, 1994).

Modern approaches to light field display explore co-designs of optical elements and compressive computation to achieve higher resolutions, increased contrast, wider fields of view, and other benefits (Wetzstein 2012, 2011; Lanman 2011, 2010).

- Glare reduction. Glare arises due to multiple scattering of light inside the camera's body and lens optics and reduces image contrast. While glare has been analyzed in 2D image space (Talvala 2007), it is useful to identify it as a 4D ray-space phenomenon (Raskar 2008). By statistically analyzing the ray-space inside a camera, one can classify and remove glare artifacts. In ray-space, glare behaves as high frequency noise and can be reduced by outlier rejection. Such analysis can be performed by capturing the light field inside the camera, but it results in the loss of spatial resolution. Uniform and non-uniform ray sampling could be used to reduce glare without significantly compromising image resolution (Raskar 2008).
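Two of the operations listed above, extracting a 2D slice for light field rendering and integrating over views for synthetic aperture photography, can be illustrated on a discretely sampled light slab. The following is a minimal sketch, not the method of any cited paper. It assumes the light field is stored as a NumPy array indexed `[u, v, s, t]`, uses integer pixel shifts as a crude stand-in for shearing, and relies on `np.roll`, which wraps around at image borders. The function names and the `shift_per_view` parameter are hypothetical.

```python
import numpy as np

def sub_aperture_view(light_field, u, v):
    """2D slice at fixed (u, v): the image seen from that uv-plane position."""
    return light_field[u, v]

def refocus(light_field, shift_per_view):
    """Shift-and-add synthetic aperture image.

    Each view is translated in proportion to its offset from the central
    view, then all views are averaged. Different values of shift_per_view
    bring different fronto-parallel depths into focus.
    Simplifications: integer shifts only, wrap-around at the borders.
    """
    n_u, n_v, n_s, n_t = light_field.shape
    cu, cv = (n_u - 1) / 2.0, (n_v - 1) / 2.0
    out = np.zeros((n_s, n_t), dtype=float)
    for u in range(n_u):
        for v in range(n_v):
            ds = int(round((u - cu) * shift_per_view))
            dt = int(round((v - cv) * shift_per_view))
            out += np.roll(light_field[u, v], shift=(ds, dt), axis=(0, 1))
    return out / (n_u * n_v)

# Synthetic test scene: a single bright point whose image position moves
# by 2 pixels per view step (i.e. it has a parallax of 2 pixels per view).
lf = np.zeros((3, 3, 16, 16))
for u in range(3):
    for v in range(3):
        lf[u, v, 8 + (u - 1) * 2, 8 + (v - 1) * 2] = 1.0

in_focus = refocus(lf, shift_per_view=-2)   # cancels the parallax
out_of_focus = refocus(lf, shift_per_view=0)  # point is spread over 9 pixels
```

With the shift chosen to cancel the point's parallax, all nine views align and the point is reproduced at full intensity; with zero shift its energy is spread across nine pixels, which is the finite depth of field described above.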

Notes

See also

References

Theory

- Adelson, E.H., Bergen, J.R. (1991). "The Plenoptic Function and the Elements of Early Vision", In Computation Models of Visual Processing, M. Landy and J.A. Movshon, eds., MIT Press, Cambridge, 1991, pp. 3–20.

- Arvo, J. (1994). "The Irradiance Jacobian for Partially Occluded Polyhedral Sources", Proc. ACM SIGGRAPH, ACM Press, pp. 335–342.

- Bolles, R.C., Baker, H.H., Marimont, D.H. (1987). "Epipolar-Plane Image Analysis: An Approach to Determining Structure from Motion", International Journal of Computer Vision, Vol. 1, No. 1, 1987, Kluwer Academic Publishers, pp. 7–55.

- Faraday, M., "Thoughts on Ray Vibrations", Philosophical Magazine, S.3, Vol XXVIII, N188, May 1846.

- Gershun, A. (1936). "The Light Field", Moscow, 1936. Translated by P. Moon and G. Timoshenko in Journal of Mathematics and Physics, Vol. XVIII, MIT, 1939, pp. 51–151.

- Gortler, S.J., Grzeszczuk, R., Szeliski, R., Cohen, M. (1996). "The Lumigraph", Proc. ACM SIGGRAPH, ACM Press, pp. 43–54.

- Levoy, M., Hanrahan, P. (1996). "Light Field Rendering", Proc. ACM SIGGRAPH, ACM Press, pp. 31–42.

- Moon, P., Spencer, D.E. (1981). The Photic Field, MIT Press.

- Wigner Distribution Function and Light Fields

Analysis

- Wetzstein, G., Ihrke, I., Heidrich, W. (2013). "On Plenoptic Multiplexing and Reconstruction", International Journal of Computer Vision (IJCV), Volume 101, Issue 2, pp. 384–400.

- Ramamoorthi, R., Mahajan, D., Belhumeur, P. (2006). "A First Order Analysis of Lighting, Shading, and Shadows", ACM TOG.

- Zwicker, M., Matusik, W., Durand, F., Pfister, H. (2006). "Antialiasing for Automultiscopic 3D Displays", Eurographics Symposium on Rendering, 2006.

- Ng, R. (2005). "Fourier Slice Photography", Proc. ACM SIGGRAPH, ACM Press, pp. 735–744.

- Durand, F., Holzschuch, N., Soler, C., Chan, E., Sillion, F. X. (2005). "A Frequency Analysis of Light Transport", Proc. ACM SIGGRAPH, ACM Press, pp. 1115–1126.

- Chai, J.-X., Tong, X., Chan, S.-C., Shum, H. (2000). "Plenoptic Sampling", Proc. ACM SIGGRAPH, ACM Press, pp. 307–318.

- Halle, M. (1994). "Holographic Stereograms as Discrete Imaging Systems", in SPIE Proc. Vol. #2176: Practical Holography VIII, S.A. Benton, ed., pp. 73–84.

- Yu, J., McMillan, L. (2004). "General Linear Cameras", Proc. ECCV 2004, Lecture Notes in Computer Science, pp. 14–27.

Light field cameras

- Marwah, K., Wetzstein, G., Bando, Y., Raskar, R. (2013). "Compressive Light Field Photography using Overcomplete Dictionaries and Optimized Projections", ACM Transactions on Graphics (SIGGRAPH).

- Liang, C.K., Lin, T.H., Wong, B.Y., Liu, C., Chen, H.H. (2008). "Programmable Aperture Photography: Multiplexed Light Field Acquisition", Proc. ACM SIGGRAPH.

- Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., Tumblin, J. (2007). "Dappled Photography: Mask Enhanced Cameras for Heterodyned Light Fields and Coded Aperture Refocusing", Proc. ACM SIGGRAPH.

- Georgiev, T., Zheng, C., Nayar, S., Curless, B., Salesin, D., Intwala, C. (2006). "Spatio-angular Resolution Trade-offs in Integral Photography", Proc. EGSR 2006.

- Kanade, T., Saito, H., Vedula, S. (1998). "The 3D Room: Digitizing Time-Varying 3D Events by Synchronized Multiple Video Streams", Tech report CMU-RI-TR-98-34, December 1998.

- Levoy, M. (2002). Stanford Spherical Gantry.

- Levoy, M., Ng, R., Adams, A., Footer, M., Horowitz, M. (2006). "Light Field Microscopy", ACM Transactions on Graphics (Proc. SIGGRAPH), Vol. 25, No. 3.

- Ng, R., Levoy, M., Brédif, M., Duval, G., Horowitz, M., Hanrahan, P. (2005). "Light Field Photography with a Hand-Held Plenoptic Camera", Stanford Tech Report CTSR 2005-02, April, 2005.

- Wilburn, B., Joshi, N., Vaish, V., Talvala, E., Antunez, E., Barth, A., Adams, A., Levoy, M., Horowitz, M. (2005). "High Performance Imaging Using Large Camera Arrays", ACM Transactions on Graphics (Proc. SIGGRAPH), Vol. 24, No. 3, pp. 765–776.

- Yang, J.C., Everett, M., Buehler, C., McMillan, L. (2002). "A Real-Time Distributed Light Field Camera", Proc. Eurographics Rendering Workshop 2002.

- "The CAFADIS camera"

Light field displays

- Wetzstein, G., Lanman, D., Hirsch, M., Raskar, R. (2012). "Tensor Displays: Compressive Light Field Display using Multilayer Displays with Directional Backlighting", ACM Transactions on Graphics (SIGGRAPH)

- Wetzstein, G., Lanman, D., Heidrich, W., Raskar, R. (2011). "Layered 3D: Tomographic Image Synthesis for Attenuation-based Light Field and High Dynamic Range Displays", ACM Transactions on Graphics (SIGGRAPH)

- Lanman, D., Wetzstein, G., Hirsch, M., Heidrich, W., Raskar, R. (2011). "Polarization Fields: Dynamic Light Field Display using Multi-Layer LCDs", ACM Transactions on Graphics (SIGGRAPH Asia)

- Lanman, D., Hirsch, M., Kim, Y., Raskar, R. (2010). "HR3D: Glasses-free 3D Display using Dual-stacked LCDs High-Rank 3D Display using Content-Adaptive Parallax Barriers", ACM Transactions on Graphics (SIGGRAPH Asia)

- Matusik, W., Pfister, H. (2004). "3D TV: A Scalable System for Real-Time Acquisition, Transmission, and Autostereoscopic Display of Dynamic Scenes", Proc. ACM SIGGRAPH, ACM Press.

- Javidi, B., Okano, F., eds. (2002). Three-Dimensional Television, Video and Display Technologies, Springer-Verlag.

Light field archives

- "The Stanford Light Field Archive"

- "UCSD/MERL Light Field Repository"

- "The HCI Light Field Benchmark"

- "Synthetic Light Field Archive"

Applications

- Heide, F., Wetzstein, G., Raskar, R., Heidrich, W. (2013). "Adaptive Image Synthesis for Compressive Displays", ACM Transactions on Graphics (SIGGRAPH)

- Wetzstein, G., Raskar, R., Heidrich, W. (2011). "Hand-Held Schlieren Photography with Light Field Probes", IEEE International Conference on Computational Photography (ICCP)

- Pérez, F., Marichal, J. G., Rodriguez, J.M. (2008). "The Discrete Focal Stack Transform", Proc. EUSIPCO

- Raskar, R., Agrawal, A., Wilson, C., Veeraraghavan, A. (2008). "Glare Aware Photography: 4D Ray Sampling for Reducing Glare Effects of Camera Lenses", Proc. ACM SIGGRAPH.

- Talvala, E-V., Adams, A., Horowitz, M., Levoy, M. (2007). "Veiling Glare in High Dynamic Range Imaging", Proc. ACM SIGGRAPH.

- Halle, M., Benton, S., Klug, M., Underkoffler, J. (1991). "The UltraGram: A Generalized Holographic Stereogram", SPIE Vol. 1461, Practical Holography V, S.A. Benton, ed., pp. 142–155.

- Zomet, A., Feldman, D., Peleg, S., Weinshall, D. (2003). "Mosaicing New Views: The Crossed-Slits Projection", IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), Vol. 25, No. 6, June 2003, pp. 741–754.

- Vaish, V., Garg, G., Talvala, E., Antunez, E., Wilburn, B., Horowitz, M., Levoy, M. (2005). "Synthetic Aperture Focusing using a Shear-Warp Factorization of the Viewing Transform", Proc. Workshop on Advanced 3D Imaging for Safety and Security, in conjunction with CVPR 2005.

- Rademacher, P., Bishop, G. (1998). "Multiple-Center-of-Projection Images", Proc. ACM SIGGRAPH, ACM Press.

- Isaksen, A., McMillan, L., Gortler, S.J. (2000). "Dynamically Reparameterized Light Fields", Proc. ACM SIGGRAPH, ACM Press, pp. 297–306.

- Buehler, C., Bosse, M., McMillan, L., Gortler, S., Cohen, M. (2001). "Unstructured Lumigraph Rendering", Proc. ACM SIGGRAPH, ACM Press.

- Ashdown, I. (1993). "Near-Field Photometry: A New Approach", Journal of the Illuminating Engineering Society, Vol. 22, No. 1, Winter, 1993, pp. 163–180.

- [1] The radiance on a ray that hits the object is not equal to the radiance of the light coming from the object on the same ray but on the other side of the object.